LLM’s basic learning theory

[toc]

Introduction to large language models

Large Language Model (LLM), also known as large language model, is an artificial intelligence model designed to understand and generate human language.

LLMs typically refer to language models containing tens of billions (or more) of parameters that are trained on massive amounts of text data to gain a deep understanding of language. At present, well-known foreign LLMs include GPT-3.5, GPT-4, PaLM, Claude and LLaMA, etc., and domestic ones include Wenxinyiyan, iFlytek Spark, Tongyi Qianwen, ChatGLM, Baichuan, etc.

In order to explore the limits of performance, many researchers began to train increasingly large language models, such as GPT-3 with 175 billion parameters and PaLM with 540 billion parameters. Although these large language models use similar architectures and pre-training tasks to small language models such as BERT with 330 million parameters and GPT-2 with `1.5 billion parameters, they exhibit very different capabilities , especially showing amazing potential when solving complex tasks, which is called “emergent capability”. Taking GPT-3 and GPT-2 as examples, GPT-3 can solve few-shot tasks by learning context, while GPT-2 performs worse in this regard. Therefore, the scientific research community gave these huge language models a name, calling them “Large Language Models (LLM)”. An outstanding application of LLM is ChatGPT, which is a bold attempt of the GPT series LLM for conversational applications with humans, showing very smooth and natural performance.

The development history of LLM

Research on language modeling can be traced back to the 1990s. Research at that time mainly focused on using statistical learning methods to predict words and predict the next word by analyzing previous words. However, there are certain limitations in understanding complex language rules.

Subsequently, researchers continued to try to improve. In 2003, Bengio, a pioneer of deep learning, integrated the idea of deep learning into a language model for the first time in his classic paper “A Neural Probabilistic Language Model”. The powerful neural network model is equivalent to providing a powerful “brain” for the computer to understand language, allowing the model to better capture and understand the complex relationships in language.

Around 2018, the Transformer architecture neural network model began to emerge. These models are trained through large amounts of text data, enabling them to deeply understand language rules and patterns by reading large amounts of text, just like letting computers read the entire Internet. They have a deeper understanding of language, which greatly improves the model’s performance in various natural Performance on language processing tasks.

At the same time, researchers have found that as the scale of language models increases (either increasing the model size or using more data), the models exhibit some amazing capabilities and their performance on various tasks is significantly improved. This discovery marks the beginning of the era of large language models (LLMs).

LLM capabilities

Emergent abilities

One of the most striking features that distinguish large language models (LLMs) from previous pre-trained language models (PLMs) is their emergent capability. Emergent capability is a surprising ability that is not evident in small models but is particularly prominent in large models. Similar to the phase change phenomenon in physics, emergence capability is like the rapid improvement of model performance as the scale increases, exceeding the random level, which is what we often call quantitative change causing qualitative change.

Emergent abilities can be related to certain complex tasks, but we are more concerned with their general abilities. Next, we briefly introduce three typical emergent capabilities of LLM:

- Contextual Learning: Contextual learning capability was first introduced by GPT-3. This capability allows language models, given natural language instructions or multiple task examples, to perform tasks by understanding the context and generating appropriate output without the need for additional training or parameter updates.

- Instruction following: Fine-tuning by using multi-task data described in natural language, which is the so-called

instruction fine-tuning. LLM is shown to perform well on unseen tasks formally described using instructions. This means that LLM is able to perform tasks based on task instructions without having to see specific examples beforehand, demonstrating its strong generalization capabilities. - Step-by-step reasoning: Small language models often struggle to solve complex tasks involving multiple reasoning steps, such as mathematical problems. However, LLM solves these tasks by employing a CoT (Chain of Thought) reasoning strategy, using a prompt mechanism that includes intermediate reasoning steps to arrive at the final answer. Presumably, this ability may be acquired through training in the code.

The ability to support multiple applications as a base model

In 2021, researchers from Stanford University and other universities proposed the concept of foundation model, clarifying the role of pre-training models. This is a new AI technology paradigm that relies on the training of massive unlabeled data to obtain large models (single-modal or multi-modal) that can be applied to a large number of downstream tasks. In this way, multiple applications can be constructed uniformly relying on only one or a few large models.

Large language models are a typical example of this new model. Using unified large models can greatly improve R&D efficiency. This is a substantial improvement over developing a single model at a time. Large models can not only shorten the development cycle of each specific application and reduce the required manpower investment, but also achieve better application results based on the reasoning, common sense and writing skills of large models. Therefore, the large model can become a unified base model for AI application development. This is a new paradigm that serves multiple purposes and deserves to be vigorously promoted.

General Artificial Intelligence

Over the decades, artificial intelligence researchers have achieved several milestones that have significantly advanced the development of machine intelligence, even to the point of imitating human intelligence in specific tasks. For example, AI summarizers use machine learning (ML) models to extract key points from documents and generate easy-to-understand summaries. AI is therefore a computer science discipline that enables software to solve novel and difficult tasks with human-level performance.

In contrast, AGI systems can solve problems in various fields just like humans, without human intervention. AGI is not limited to a specific scope, but can teach itself and solve problems for which it has never been trained. Therefore, AGI is a theoretical manifestation of complete artificial intelligence that solves complex tasks with broad human cognitive capabilities.

Some computer scientists believe that an AGI is a hypothetical computer program capable of human understanding and cognition. AI systems can learn to handle unfamiliar tasks without requiring additional training in such theories. In other words, the AI systems we use today require a lot of training to handle related tasks in the same domain. For example, you have to fine-tune a pretrained large language model (LLM) using a medical dataset before it can run consistently as a medical chatbot.

Strong AI is complete artificial intelligence, or AGI, that is capable of performing tasks at the level of human cognition despite having little background knowledge. Science fiction often depicts strong AI as thinking machines capable of human understanding, regardless of domain limitations.

In contrast, weak or narrow AI are AI systems that are limited to computational specifications, algorithms, and the specific tasks they are designed for. For example, previous AI models had limited memory and could only rely on real-time data to make decisions. Even emerging generative AI applications with higher memory retention rates are considered weak AI because they cannot be reused in other areas.

Introduction to RAG

Question: Today’s leading large language models (LLMs) are trained on large amounts of data in order to enable them to master a wide range of general knowledge, which is [toc]

Build RAG application

LLM access langchain

LangChain provides an efficient development framework for developing custom applications based on LLM, allowing developers to quickly activate the powerful capabilities of LLM and build LLM applications. LangChain also supports a variety of large models and has built-in calling interfaces for large models such as OpenAI and LLAMA. However, LangChain does not have all large models built-in. It provides strong scalability by allowing users to customize LLM types.

Use LangChain to call ChatGPT

LangChain provides encapsulation of a variety of large models. The interface based on LangChain can easily call ChatGPT and integrate it into personal applications built with LangChain as the basic framework. Here we briefly describe how to use the LangChain interface to call ChatGPT.

Integrating ChatGPT into LangChain’s framework allows developers to leverage its advanced generation capabilities to power their applications. Below, we will introduce how to call ChatGPT through the LangChain interface and configure the necessary personal keys.

1. Get API Key

Before you can call ChatGPT through LangChain, you need to obtain an API key from OpenAI. This key will be used to authenticate requests, ensuring that your application can communicate securely with OpenAI’s servers. The steps to obtain a key typically include:

- Register or log in to OpenAI’s website.

- Enter the API management page.

- Create a new API key or use an existing one.

- Copy this key, you will use it when configuring LangChain.

2. Configure the key in LangChain

Once you have your API key, the next step is to configure it in LangChain. This usually involves adding the key to your environment variables or configuration files. Doing this ensures that your keys are not hard-coded in your application code, improving security.

For example, you can add the following configuration in the .env file:

OPENAI_API_KEY=Your API key

Make sure this file is not included in the version control system to avoid leaking the key.

3. Use LangChain interface to call ChatGPT

The LangChain framework usually provides a simple API for calling different large models. The following is a Python-based example showing how to use LangChain to call ChatGPT for text generation:

from langchain.chains import OpenAIChain

# Initialize LangChain’s ChatGPT interface

chatgpt = OpenAIChain(api_key="your API key")

# Generate replies using ChatGPT

response = chatgpt.complete(prompt="Hello, world! How can I help you today?")

print(response)

In this example, the OpenAIChain class is a wrapper provided by LangChain that leverages your API key to handle authentication and calls to ChatGPT.

Model

Import OpenAI’s conversation model ChatOpenAI from langchain.chat_models. In addition to OpenAI, langchain.chat_models also integrates other conversation models. For more details, please view Langchain official documentation.

import os

import openai

from dotenv import load_dotenv, find_dotenv

# Read local/project environment variables.

# find_dotenv() finds and locates the path of the .env file

# load_dotenv() reads the .env file and loads the environment variables in it into the current running environment

# If you set a global environment variable, this line of code has no effect.

_ = load_dotenv(find_dotenv())

# Get the environment variable OPENAI_API_KEY

openai_api_key = os.environ['OPENAI_API_KEY']

If langchain-openai is not installed, please run the following code first!

from langchain_openai import ChatOpenAI

Next, you need to instantiate a ChatOpenAI class. You can pass in hyperparameters to control the answer when instantiating, such as the temperature parameter.

# Here we set the parameter temperature to 0.0 to reduce the randomness of generated answers.

# If you want to get different and innovative answers every time, you can try adjusting this parameter.

llm = ChatOpenAI(temperature=0.0)

llm

ChatOpenAI(client=<openai.resources.chat.completions.Completions object at 0x000001B17F799BD0>, async_client=<openai.resources.chat.completions.AsyncCompletions object at 0x000001B17F79BA60>, temperature=0.0, openai_api_key=SecretStr('***** *****'), openai_api_base='https://api.chatgptid.net/v1', openai_proxy='')

The cell above assumes that your OpenAI API key is set in an environment variable, if you wish to specify the API key manually, use the following code:

llm = ChatOpenAI(temperature=0, openai_api_key="YOUR_API_KEY")

As you can see, the ChatGPT-3.5 model is called by default. In addition, several commonly used hyperparameter settings include:

model_name: The model to be used, the default is ‘gpt-3.5-turbo’, the parameter settings are consistent with the OpenAI native interface parameter settings.temperature: temperature coefficient, the value is the same as the native interface.openai_api_key: OpenAI API key. If you do not use environment variables to set the API Key, you can also set it during instantiation.openai_proxy: Set the proxy. If you do not use environment variables to set the proxy, you can also set it during instantiation.streaming: Whether to use streaming, that is, output the model answer verbatim. The default is False, which will not be described here.max_tokens: The maximum number of tokens output by the model. The meaning and value are the same as above.

Once we’ve initialized the LLM of your choice, we can try using it! Let’s ask “Please introduce yourself!”

output = llm.invoke("Please introduce yourself!")

//output

// AIMessage(content='Hello, I am an intelligent assistant that focuses on providing users with various services and help. I can answer questions, provide information, solve problems, and help users complete their work and life more efficiently. If you If you have any questions or need help, please feel free to let me know and I will try my best to help you. ', response_metadata={'token_usage': {'completion_tokens': 104, 'prompt_tokens': 20, 'total_tokens': 124 }, 'model_name': 'gpt-3.5-turbo', 'system_fingerprint': 'fp_b28b39ffa8', 'finish_reason': 'stop', 'logprobs': None})

Prompt (prompt template)

When we develop large model applications, in most cases the user’s input is not passed directly to the LLM. Typically, they add user input to a larger text called a prompt template that provides additional context about the specific task at hand. PromptTemplates As you can see from the above results, we successfully parsed the ChatMessage type output into a string through the output parser to help solve this problem! They bundle all logic from user input to fully formatted prompts. This can be started very simply - for example, the tip for generating the string above is:

We need to construct a personalized Template first:

from langchain_core.prompts import ChatPromptTemplate

#Here we ask the model to translate the given text into Chinese

prompt = """Please translate the text separated by three backticks into English!\

text: ```{text}```

"""

'I carried luggage heavier than my body and dived into the bottom of the Nile River. After passing through several flashes of lightning, I saw a pile of halos, not sure if this is the place.'From the above results, we can see that we successfully parsed the output of type

ChatMessageintostringthrough the output parser.

Complete process

We can now combine all of this into a chain. This chain will take the input variables, pass those variables to the prompt template to create the prompt, pass the prompt to the language model, and then pass the output through the (optional) output parser. Next we will use the LCEL syntax to quickly implement a chain. Let’s see it in action!

chain = chat_prompt | llm | output_parser

chain.invoke({"input_language":"Chinese", "output_language":"English","text": text})

'I carried luggage heavier than my body and dived into the bottom of the Nile River. After passing through several flashes of lightning, I saw a pile of halos, not sure if this is the place.'

Let’s test another example:

text = 'I carried luggage heavier than my body and dived into the bottom of the Nile River. After passing through several flashes of lightning, I saw a pile of halos, not sure if this is the place.'

chain.invoke({"input_language":"English", "output_language":"Chinese","text": text})

'I dived to the bottom of the Nile carrying luggage heavier than my body. After passing through a few bolts of lightning, I saw a bunch of rings and wasn't sure if this was the destination. '

What is LCEL? LCEL (LangChain Expression Language, Langchain’s expression language), LCEL is a new syntax and an important addition to the LangChain toolkit. It has many advantages, making it easier and more convenient for us to deal with LangChain and agents.

- LCEL provides asynchronous, batch and stream processing support so that code can be quickly ported across different servers.

- LCEL has backup measures to solve the problem of LLM format output.

- LCEL increases the parallelism of LLM and improves efficiency.

- LCEL has built-in logging, which helps to understand the operation of complex chains and agents even if the agent becomes complex.

Usage examples:

chain = prompt | model | output_parser

In the code above we use LCEL to piece together the different components into a chain where user input is passed to the prompt template, then the prompt template output is passed to the model, and then the model output is passed to the output parser. The notation of | is similar to the Unix pipe operator, which links different components together, using the output of one component as the input of the next component.

API calls

The call to ChatGpt we introduced above is actually similar to the call to other large language model APIs. Using the LangChain API means that you are sending a request to the remote server through the Internet, and the preconfigured model is running on the server. This is usually a centralized solution that is hosted and maintained by a service provider.

In this demo, we will call a simple text analysis API, such as the Sentiment Analysis API, to analyze the sentiment of text. Suppose we use an open API service, such as text-processing.com.

step:

- Register and get an API key (if required).

- Write code to send HTTP requests.

- Present and interpret the returned results.

Python code example:

import requests

def analyze_sentiment(text):

url = "http://text-processing.com/api/sentiment/"

payload = {'text': text}

response = requests.post(url, data=payload)

return response.json()

# Sample text

text = "I love coding with Python!"

result = analyze_sentiment(text)

print("Sentiment Analysis Result:", result)

In this example, we do this by sending a POST request to the sentiment analysis interface of text-processing.com and printing out the results. This demonstrates how to leverage the computing resources of a remote server to perform tasks.

Local model calling demonstration

In this demo, we will use a library in Python (such as TextBlob) that allows us to perform text sentiment analysis locally without any external API calls.

step:

- Install the necessary libraries (for example,

TextBlob). - Write code to analyze the text.

- Present and interpret results.

Python code example:

from textblob import TextBlob

def local_sentiment_analysis(text):

blob = TextBlob(text)

return blob.sentiment

# Sample text

text = "I love coding with Python!"

result = local_sentiment_analysis(text)

print("Local Sentiment Analysis Result:", result)

In this example, we perform sentiment analysis of text directly on the local computer through the TextBlob library. This approach shows how to process data and tasks in a local environment without relying on external services.

Build a search question and answer chain

Load vector database

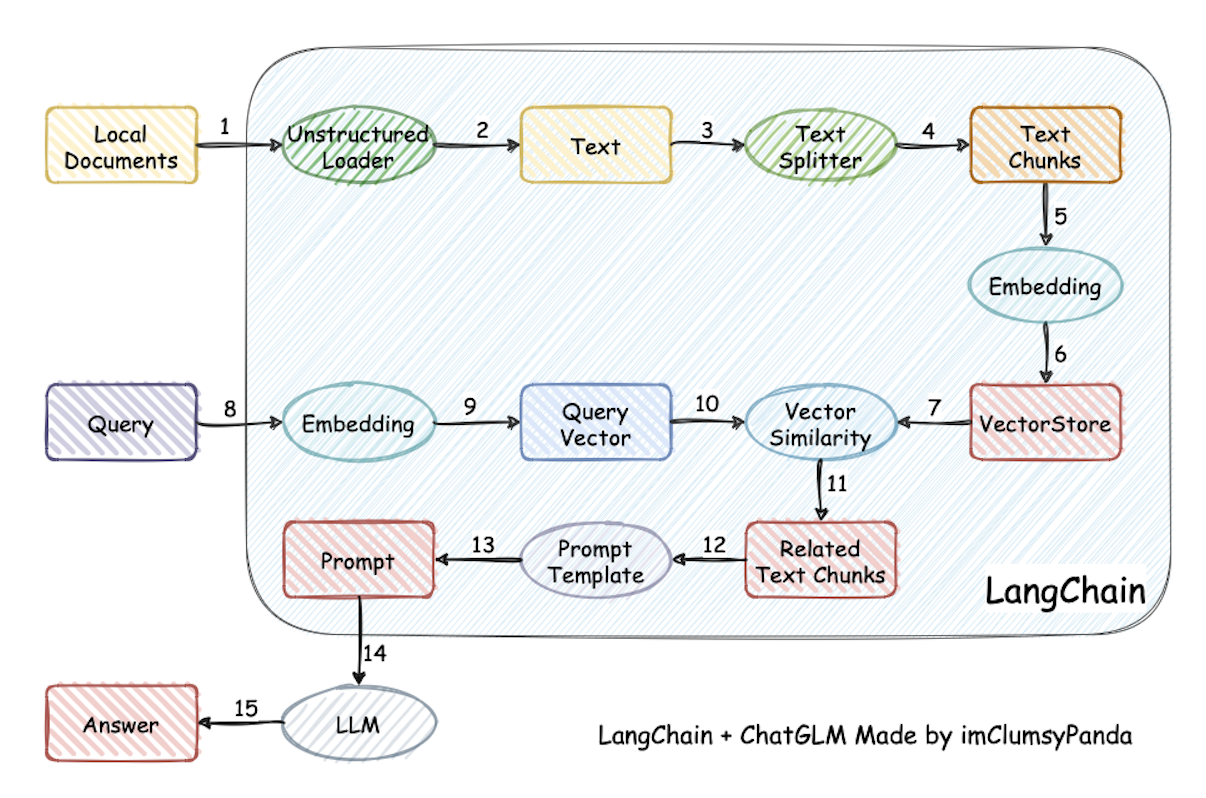

First, we will load the vector database we built in the previous chapter. Make sure to use the same embedding model that you used to build the vector database.

importsys

sys.path.append("../C3 Build Knowledge Base") # Add the parent directory to the system path

from zhipuai_embedding import ZhipuAIEmbeddings # Use Zhipu Embedding API

from langchain.vectorstores.chroma import Chroma # Load Chroma vector store

# Load your API_KEY from environment variables

from dotenv import load_dotodotenv, find_dotenv

import os

_ = load_dotenv(find_dotenv()) # Read local .env file

zhipuai_api_key = os.environ['ZHIPUAI_API_KEY']

#define Embedding examples

embedding = ZhipuAIEmbeddings()

# Vector database persistence path

persist_directory = '../C3 build knowledge base/data_base/vector_db/chroma'

#Initialize vector database

vectordb = Chroma(

persist_directory=persist_directory,

embedding_function=embedding

)

print(f"The number stored in the vector library: {vectordb._collection.count()}")

Number stored in vector library: 20

We can test the loaded vector database and use a query to perform vector retrieval. The following code will search based on similarity in the vector database and return the top k most similar documents.

⚠️Before using similarity search, please make sure you have installed the OpenAI open source fast word segmentation tool tiktoken package:

pip install tiktoken

question = "What is prompt engineering?"

docs = vectordb.similarity_search(question,k=3)

print(f"Number of retrieved contents: {len(docs)}")

Number of items retrieved: 3Print the retrieved content

``py for i, doc in enumerate(docs): print(f"The {i}th content retrieved: \n {doc.page_content}", end="\n——————— ——————————–\n")

Test vector database

Use the following code to test a loaded vector database, retrieving documents similar to the query question.

# Install necessary word segmentation tools

# ⚠️Please make sure you have installed OpenAI’s tiktoken package: pip install tiktoken

question = "What is prompt engineering?"

docs = vectordb.similarity_search(question, k=3)

print(f"Number of retrieved contents: {len(docs)}")

#Print the retrieved content

for i, doc in enumerate(docs):

print(f"The {i}th content retrieved: \n{doc.page_content}")

print("------------------------------------------------- ------")

Create an LLM instance

Here, we will call OpenAI’s API to create a language model instance.

import os

OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]

from langchain_openai import ChatOpenAI

llm = ChatOpenAI(model_name="gpt-3.5-turbo", temperature=0)

response = llm.invoke("Please introduce yourself!")

print(response.content)

Added some interesting methods for creating LLM instances:

1. Use third-party API services (such as OpenAI’s API)

OpenAI provides a variety of pre-trained large language models (such as GPT-3 or ChatGPT) that can be called directly through its API. The advantage of this method is that it is simple to operate and does not require you to manage the training and deployment of the model yourself, but it does require paying fees and relying on external network services.

import openai # Set API key openai.api_key = 'Your API key' # Create a language model instance response = openai.Completion.create( engine="text-davinci-002", prompt="Please enter your question", max_tokens=50 ) print(response.choices[0].text.strip())**2. Use machine learning frameworks (such as Hugging Face Transformers) **

If you want more control, or need to run the model locally, you can use Hugging Face’s Transformers library. This library provides a wide range of pretrained language models that you can easily download and run locally.

from transformers import pipeline # Load model and tokenizer generator = pipeline('text-generation', model='gpt2') # Generate text response = generator("Please enter your question", max_length=100, num_return_sequences=1) print(response[0]['generated_text'])3. Autonomous training model

For advanced users with specific needs, you can train a language model yourself. This often requires large amounts of data and computing resources. You can use a deep learning framework like PyTorch or TensorFlow to train a model from scratch or fine-tune an existing pre-trained model.

import torch from transformers import GPT2Model, GPT2Config # Initialize model configuration configuration = GPT2Config() #Create model instance model = GPT2Model(configuration) # The model can be further trained or fine-tuned as needed

Build a search question and answer chain

By combining vector retrieval with answer generation from language models, an effective retrieval question-answering chain is constructed.

from langchain.prompts import PromptTemplate

from langchain.chains import RetrievalQA

template = """Use the following context to answer the question. If you don't know the answer, just say you don't know. The answer should be concise and to the point, adding "Thank you for asking!" at the end! ".

{context}

Question: {question}

"""

QA_CHAIN_PROMPT = PromptTemplate(input_variables=["context", "question"], template=template)

qa_chain = RetrievalQA.from_chain_type(llm, retriever=vectordb.as_retriever(), return_source_documents=True, chain_type_kwargs={"prompt": QA_CHAIN_PROMPT})

# Test retrieval question and answer chain

question_1 = "What is a pumpkin book?"

result = qa_chain({"query": question_1})

print(f"Retrieve Q&A results: {result['result']}")

In this way, we optimize the structure of the code and the clarity of the text, ensuring functional integration and readability. At the same time, we have also strengthened the comments of the code to help understand the role of each step and the necessary installation tips.

Create a method to retrieve the QA chain RetrievalQA.from_chain_type() with the following parameters:

- llm: Specify the LLM used

- Specify chain type: RetrievalQA.from_chain_type(chain_type=“map_reduce”), you can also use the load_qa_chain() method to specify the chain type.

- Customized prompt: By specifying the chain_type_kwargs parameter in the RetrievalQA.from_chain_type() method, and this parameter: chain_type_kwargs = {“prompt”: PROMPT}

- Return to the source document: Specify the return_source_documents=True parameter in the RetrievalQA.from_chain_type() method; you can also use the RetrievalQAWithSourceChain() method to return the reference of the source document (coordinates or primary key, index)

Retrieval question and answer chain effect test

Once the search question and answer chain is constructed, the next step is to test its effectiveness. We can evaluate its performance by asking some sample questions.

# Define test questions

questions = ["What is the Pumpkin Book?", "Who is Wang Yangming?"]

# Traverse the questions and use the search question and answer chain to get the answers

for question in questions:

result = qa_chain({"query": question})

print(f"Question: {question}\nAnswer: {result['result']}\n")

This test helps us understand how the model performs in real-world applications, and how efficient and accurate it is at handling specific types of problems.

Prompt effect built based on recall results and query

navigation:

result = qa_chain({"query": question_1})

print("Results of answering question_1 after large model + knowledge base:")

print(result["result"])

test:

d:\Miniconda\miniconda3\envs\llm2\lib\site-packages\langchain_core\_api\deprecation.py:117: LangChainDeprecationWarning: The function `__call__` was deprecated in LangChain 0.1.0 and will be removed in 0.2.0. Use invoke instead.

warn_deprecated(

The result of answering question_1 after large model + knowledge base:

Sorry, I don't know what a pumpkin book is. Thank you for your question!

Output result:

result = qa_chain({"query": question_2})

print("Results of answering question_2 after large model + knowledge base:")

print(result["result"])

The results of answering question_2 after large model + knowledge base: I don't know who Wang Yangming is. Thank you for your question!

The results of the big model’s own answer

prompt_template = """Please answer the following questions:

{}""".format(question_1)

### Q&A based on large models

llm.predict(prompt_template)

d:\Miniconda\miniconda3\envs\llm2\lib\site-packages\langchain_core\_api\deprecation.py:117: LangChainDeprecationWarning: The function `predict` was deprecated in LangChain 0.1.7 and will be removed in 0.2.0. Use invoke instead. warn_deprecated( 'Pumpkin book refers to a kind of book about pumpkins, usually books that introduce knowledge about pumpkin planting, maintenance, cooking and other aspects. A pumpkin book can also refer to a literary work with pumpkins as the theme. '

⭐ Through the above two questions, we found that LLM did not answer very well for some knowledge in recent years and non-common knowledge professional questions. And that, coupled with our local knowledge, can help LLM come up with better answers. In addition, it also helps alleviate the “illusion” problem of large models.

Add memory function for historical conversations

In scenarios of continuous interaction with users, it is very important to maintain the continuity of the conversation.

Now we have realized that by uploading local knowledge documents, and then saving them to the vector knowledge base, by combining the query questions with the recall results of the vector knowledge base and inputting them into LLM, we will get a better answer than directly letting LLM answer Much better results. When interacting with language models, you may have noticed a key problem - they don’t remember your previous communications. This creates a big challenge when we build some applications, such as chatbots, where the conversation seems to lack real continuity. How to solve this problem?

The memory function can help the model “remember” the previous conversation content, so that it can be more accurate and personalized when answering questions.

from langchain.memory import ConversationBufferMemory

# Initialize memory storage

memory = ConversationBufferMemory(

memory_key="chat_history", # Be consistent with the input variable of prompt

return_messages=True # Return a list of messages instead of a single string

)

#Create a conversation retrieval chain

from langchain.chains import ConversationalRetrievalChain

conversational_qa = ConversationalRetrievalChain.from_llm(

llm,

retriever=vectordb.as_retriever(),

memory=memory

)

# Test memory function

initial_question = "Will you learn Python in this course?"

follow_up_question = "Why does this course need to teach this knowledge?"

# Ask questions and record answers

initial_answer = conversational_qa({"question": initial_question})

print(f"Question: {initial_question}\nAnswer: {initial_answer['answer']}")

#Ask follow up questions

follow_up_answer = conversational_qa({"question": follow_up_question})

print(f"Follow question: {follow_up_question}\nAnswer: {follow_up_answer['answer']}")

In this way, we not only enhance the coherence of the Q&A system, but also make the conversation more natural and useful. This memory function is particularly suitable for customer service robots, educational coaching applications, and any scenario that requires long-term interaction.

Dialogue retrieval chain:

The ConversationalRetrievalChain adds the ability to process conversation history on the basis of retrieving the QA chain.

Its workflow is:

- Combine previous conversations with new questions to generate a complete query.

- Search the vector database for relevant documents for the query.

- After obtaining the results, store all answers into the dialogue memory area.

- Users can view the complete conversation process in the UI.

This chaining approach places new questions in the context of previous conversations and can handle queries that rely on historical information. And keep all information in conversation memory for easy tracking.

Next let us test the effect of this dialogue retrieval chain:

Use the vector database and LLM from the previous section! Start by asking a conversation-free question “Will this class teach you Python?” and see the answers.

from langchain.chains import ConversationalRetrievalChain

retriever=vectordb.as_retriever()

qa = ConversationalRetrievalChain.from_llm(

llm,

retriever=retriever,

memory=memory

)

question = "Can I learn about prompt engineering?"

result = qa({"question": question})

print(result['answer'])

Yes, you can learn about prompt engineering. The content of this module is based on the "Prompt Engineering for Developer" course taught by Andrew Ng. It aims to share the best practices and techniques for using prompt words to develop large language model applications. The course will introduce principles for designing efficient prompts, including writing clear, specific instructions and giving the model ample time to think. By learning these topics, you can better leverage the performance of large language models and build great language model applications.

Then based on the answer, proceed to the next question “Why does this course need to teach this knowledge?":

question = "Why does this course need to teach this knowledge?"

result = qa({"question": question})

print(result['answer'])

This course teaches knowledge about Prompt Engineering, mainly to help developers better use large language models (LLM) to complete various tasks. By learning Prompt Engineering, developers can learn how to design clear prompt words to guide the language model to generate text output that meets expectations. This skill is important for developing applications and solutions based on large language models, improving the efficiency and accuracy of the models.It can be seen that LLM accurately determines this knowledge and refers to the knowledge of reinforcement learning, that is, we successfully passed historical information to it. This ability to continuously learn and correlate previous and subsequent questions can greatly enhance the continuity and intelligence of the question and answer system.

Deploy knowledge base assistant

Now that we have a basic understanding of knowledge bases and LLM, it’s time to combine them neatly and create a visually rich interface. Such an interface is not only easier to operate, but also easier to share with others.

Streamlit is a fast and convenient way to demonstrate machine learning models directly in Python through a friendly web interface. In this course, we’ll learn how to use it to build user interfaces for generative AI applications. After building a machine learning model, if you want to build a demo to show others, maybe to get feedback and drive improvements to the system, or just because you think the system is cool and want to demonstrate it: Streamlit allows you to The Python interface program quickly achieves this goal without writing any front-end, web or JavaScript code.

Learn https://github.com/streamlit/streamlit open source project

Official documentation: https://docs.streamlit.io/get-started

The faster way to build and share data applications.

Streamlit is an open source Python library for quickly creating data applications. It is designed to allow data scientists to easily transform data analysis and machine learning models into interactive web applications without requiring in-depth knowledge of web development. The difference from regular web frameworks, such as Flask/Django, is that it does not require you to write any client code (HTML/CSS/JS). You only need to write ordinary Python modules, which can be created in a short time. The beautiful and highly interactive interface allows you to quickly generate data analysis or machine learning results; on the other hand, unlike tools that can only be generated by dragging and dropping, you still have complete control over the code.

Streamlit provides a simple yet powerful set of basic modules for building data applications:

st.write(): This is one of the most basic modules used to render text, images, tables, etc. in the application.

st.title(), st.header(), st.subheader(): These modules are used to add titles, subtitles, and grouped titles to organize the layout of the application.

st.text(), st.markdown(): used to add text content and support Markdown syntax.

st.image(): used to add images to the application.

st.dataframe(): used to render Pandas data frame.

st.table(): used to render simple data tables.

st.pyplot(), st.altair_chart(), st.plotly_chart(): used to render charts drawn by Matplotlib, Altair or Plotly.

st.selectbox(), st.multiselect(), st.slider(), st.text_input(): used to add interactive widgets that allow users to select, enter, or slide in the application.

st.button(), st.checkbox(), st.radio(): used to add buttons, checkboxes, and radio buttons to trigger specific actions.

PMF: Streamli solves the problem for developers who need to quickly create and deploy data-driven applications, especially researchers and engineers who want to still be able to showcase their data analysis or machine learning models without deep learning of front-end technologies.

Streamlit lets you turn Python scripts into interactive web applications in minutes, not weeks. Build dashboards, generate reports, or create chat applications. After you create your application, you can use our community cloud platform to deploy, manage and share your application.

Why choose Streamlit?

- Simple and Pythonic: Write beautiful, easy-to-read code.

- Rapid, interactive prototyping: Let others interact with your data and provide feedback quickly.

- Live editing: See application updates immediately while editing scripts.

- Open source and free: Join the vibrant community and contribute to the future of Streamlit.

Build the application

First, create a new Python file and save it streamlit_app.py in the root of your working directory

- Import the necessary Python libraries.

import streamlit as st

from langchain_openai import ChatOpenAI

- Create the title of the application

st.title

st.title('🦜🔗 Hands-on learning of large model application development')

- Add a text input box for users to enter their OpenAI API key

openai_api_key = st.sidebar.text_input('OpenAI API Key', type='password')

- Define a function to authenticate to the OpenAI API using a user key, send a prompt, and get an AI-generated response. This function accepts the user’s prompt as a parameter and uses

st.infoto display the AI-generated response in a blue box

def generate_response(input_text):

llm = ChatOpenAI(temperature=0.7, openai_api_key=openai_api_key)

st.info(llm(input_text))

- Finally, use

st.form()to create a text box (st.text_area()) for user input. When the user clicksSubmit,generate-response()will call the function with the user’s input as argument

with st.form('my_form'):

text = st.text_area('Enter text:', 'What are the three key pieces of advice for learning how to code?')

submitted = st.form_submit_button('Submit')

if not openai_api_key.startswith('sk-'):

st.warning('Please enter your OpenAI API key!', icon='⚠')

if submitted and openai_api_key.startswith('sk-'):

generate_response(text)

- Save the current file

streamlit_app.py! - Return to your computer’s terminal to run the application

streamlit run streamlit_app.py

However, currently only a single round of dialogue can be performed. We have made some modifications to the above. By using st.session_state to store the conversation history, the context of the entire conversation can be retained when the user interacts with the application. The specific code is as follows:

# Streamlit API

def main():

st.title('🦜🔗 Hands-on learning of large model application development')

openai_api_key = st.sidebar.text_input('OpenAI API Key', type='password')

# Used to track conversation history

if 'messages' not in st.session_state:

st.session_state.messages = []

messages = st.container(height=300)

if prompt := st.chat_input("Say something"):

# Add user input to the conversation history

st.session_state.messages.append({"role": "user", "text": prompt})

# Call the respond function to get the answer

answer = generate_response(prompt, openai_api_key)

# Check if the answer is None

if answer is not None:

# Add LLM's answer to the conversation history

st.session_state.messages.append({"role": "assistant", "text": answer})

# Show the entire conversation history

for message in st.session_state.messages:

if message["role"] == "user":

messages.chat_message("user").write(message["text"])

elif message["role"] == "assistant":

messages.chat_message("assistant").write(message["text"])

Add search questions and answers

First encapsulate the code in the 2. Build the retrieval question and answer chain part:

- The get_vectordb function returns the partially persisted vector knowledge base of C3

- The get_chat_qa_chain function returns the result of calling the retrieved question and answer chain with history

- The get_qa_chain function returns the result of calling the retrieved Q&A chain without history records

def get_vectordb():

# Definition Embeddings

embedding = ZhipuAIEmbeddings()

# Vector database persistence path

persist_directory = '../C3 build knowledge base/data_base/vector_db/chroma'

#Load database

vectordb = Chroma(

persist_directory=persist_directory, # Allows us to save the persist_directory directory to disk

embedding_function=embedding

)

return vectordb

#Q&A chain with history

def get_chat_qa_chain(question:str,openai_api_key:str):

vectordb = get_vectordb()

llm = ChatOpenAI(model_name = "gpt-3.5-turbo", temperature = 0,openai_api_key = openai_api_key)

memory = ConversationBufferMemory(

memory_key="chat_history", # Be consistent with the input variable of prompt.

return_messages=True # Will return the chat history as a list of messages instead of a single string

)

retriever=vectordb.as_retriever()

qa = ConversationalRetrievalChain.from_llm(

llm,

retriever=retriever,

memory=memory

)

result = qa({"question": question})

return result['answer']

#Q&A chain without history

def get_qa_chain(question:str,openai_api_key:str):

vectordb = get_vectordb()

llm = ChatOpenAI(model_name = "gpt-3.5-turbo", temperature = 0,openai_api_key = openai_api_key)

template = """Use the following context to answer the last question. If you don't know the answer, just say you don't know and don't try to make it up.

case. Use a maximum of three sentences. Try to keep your answers concise and to the point. Always say "Thank you for asking!" at the end of your answer.

{context}

Question: {question}

"""

QA_CHAIN_PROMPT = PromptTemplate(input_variables=["context","question"],

template=template)

qa_chain = RetrievalQA.from_chain_type(llm,

retriever=vectordb.as_retriever(),

return_source_documents=True,

chain_type_kwargs={"prompt":QA_CHAIN_PROMPT})

result = qa_chain({"query": question})

return result["result"]

Then, add a radio button widget st.radio to select the mode for Q&A:

- None: Do not use the normal mode of retrieving questions and answers

- qa_chain: Search question and answer mode without history records

- chat_qa_chain: Retrieval question and answer mode with history records

selected_method = st.radio(

"Which mode do you want to choose for the conversation?",

["None", "qa_chain", "chat_qa_chain"],

captions = ["Normal mode without search Q&A", "Search Q&A mode without history", "Search Q&A mode with history"])

Enter the page, first enter OPEN_API_KEY (default), then click the radio button to select the Q&A mode, and finally enter your question in the input box and press Enter!

Deploy the application

To deploy your application to Streamlit Cloud, follow these steps:

- Create a GitHub repository for the application. Your repository should contain two files:

your-repository/

├── streamlit_app.py

└── requirements.txt

Go to Streamlit Community Cloud, click the

New appbutton in the workspace, and specify the repository, branch and master file path. Alternatively, you can customize your application’s URL by selecting a custom subdomainClick the

Deploy!button

Your application will now be deployed to the Streamlit Community Cloud and accessible from anywhere in the world! 🌎

Optimization direction:

- Added the function of uploading local documents and establishing vector database in the interface

- Added buttons for multiple LLM and embedding method selections

- Add button to modify parameters

- More……

Evaluate and optimize the generated part

We talked about how to evaluate the overall performance of a large model application based on the RAG framework. By constructing a verification set in a targeted manner, a variety of methods can be used to evaluate system performance from multiple dimensions. However, the purpose of the evaluation is to better optimize the application effect. To optimize the application performance, we need to combine the evaluation results, split the evaluated Bad Case (bad case), and evaluate each part separately. optimization.

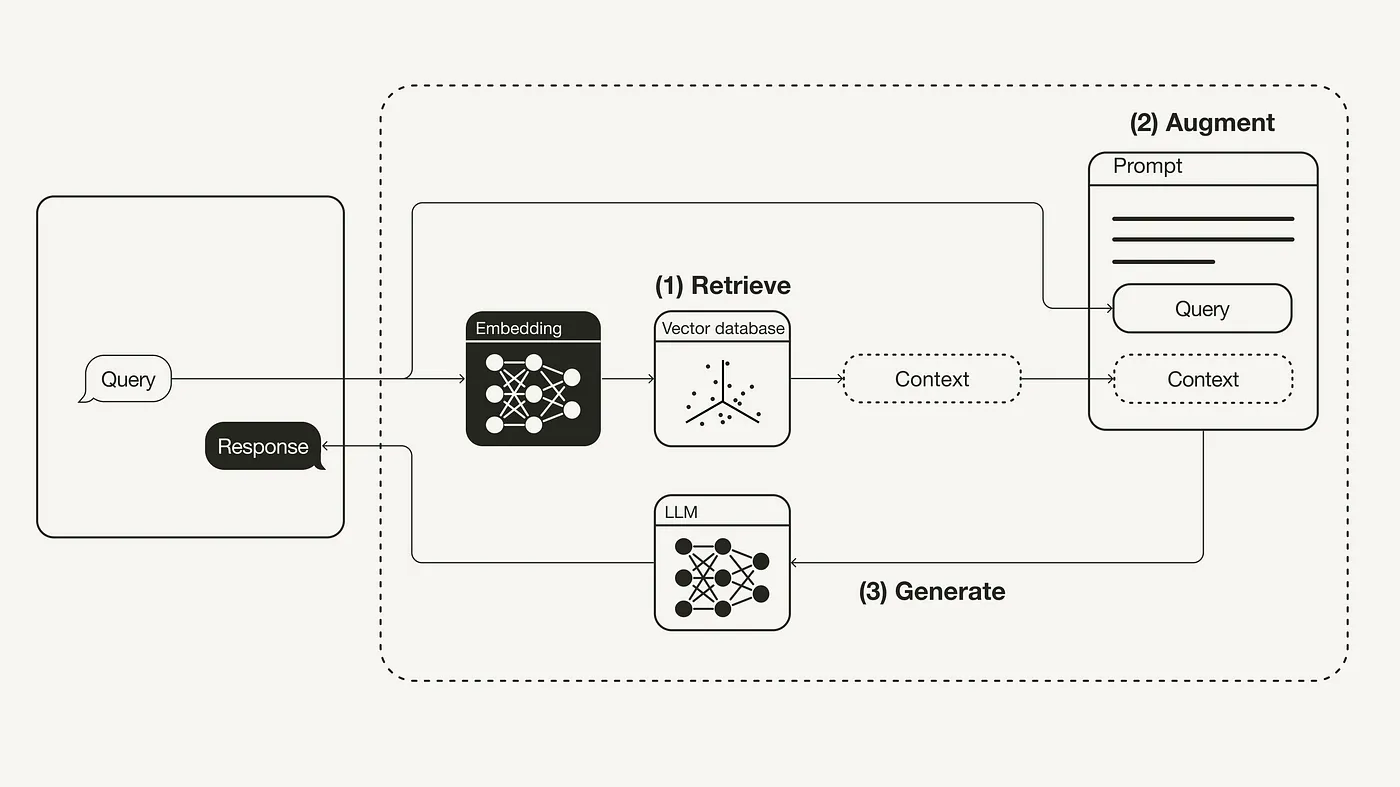

RAG stands for Retrieval Enhanced Generation, so it has two core parts: the retrieval part and the generation part. The core function of the retrieval part is to ensure that the system can find the corresponding answer fragment according to the user query, and the core function of the generation part is to ensure that after the system obtains the correct answer fragment, it can fully utilize the large model capabilities to generate an answer that meets the user’s requirements. Correct answer.

To optimize a large model application, we often need to start from these two parts at the same time, evaluate the performance of the retrieval part and the optimization part respectively, find Bad Cases and optimize performance accordingly. As for the generation part specifically, when the large model base has been restricted for use, we often optimize the generated answers by optimizing Prompt Engineering. In this chapter, we will first combine the large model application example we just built - Personal Knowledge Base Assistant to explain to you how to evaluate the performance of the analysis and generation part, find out the Bad Case in a targeted manner, and optimize it by optimizing Prompt Engineering Generate part.

Before we officially start, we first load our vector database and search chain:

importsys

sys.path.append("../C3 build knowledge base") #Put the parent directory into the system path

# Use the Zhipu Embedding API. Note that the encapsulation code implemented in the previous chapter needs to be downloaded locally.

from zhipuai_embedding import ZhipuAIEmbeddings

from langchain.vectorstores.chroma import Chroma

from langchain_openai import ChatOpenAI

from dotenv import load_dotenv, find_dotenv

import os

_ = load_dotenv(find_dotenv()) # read local .env file

zhipuai_api_key = os.environ['ZHIPUAI_API_KEY']

OPENAI_API_KEY = os.environ["OPENAI_API_KEY"]

# Definition Embeddings

embedding = ZhipuAIEmbeddings()

# Vector database persistence path

persist_directory = '../../data_base/vector_db/chroma'

#Load database

vectordb = Chroma(

persist_directory=persist_directory, # Allows us to save the persist_directory directory to disk

embedding_function=embedding

)

# Use OpenAI GPT-3.5 model

llm = ChatOpenAI(model_name = "gpt-3.5-turbo", temperature = 0)

os.environ['HTTPS_PROXY'] = 'http://127.0.0.1:7890'

os.environ["HTTP_PROXY"] = 'http://127.0.0.1:7890'

We first use the initialized Prompt to create a template-based retrieval chain:

from langchain.prompts import PromptTemplate

from langchain.chains import RetrievalQA

template_v1 = """Use the following context to answer the last question. If you don't know the answer, just say you don't know and don't try to make it up.

case. Use a maximum of three sentences. Try to keep your answers concise and to the point. Always say "Thank you for asking!" at the end of your answer.

{context}

Question: {question}

"""

QA_CHAIN_PROMPT = PromptTemplate(input_variables=["context","question"],

template=template_v1)

qa_chain = RetrievalQA.from_chain_type(llm,

retriever=vectordb.as_retriever(),

return_source_documents=True,

chain_type_kwargs={"prompt":QA_CHAIN_PROMPT})

Test the effect first:

question = "What is a Pumpkin Book"

result = qa_chain({"query": question})

print(result["result"])

The Pumpkin Book is a book that analyzes the difficult-to-understand formulas in "Machine Learning" (Watermelon Book) and adds derivation details. The best way to use the Pumpkin Book is to use the Watermelon Book as the main line, and then refer to the Pumpkin Book when you encounter difficulties in derivation or incomprehensible formulas. Thank you for your question!

Improve the quality of intuitive answers

There are many ways to find Bad Cases. The most intuitive and simplest is to evaluate the quality of intuitive answers and determine where there are deficiencies based on the original data content. For example, we can construct the above test into a Bad Case:

Question: What is a Pumpkin Book?

Initial answer: The Pumpkin Book is a book that analyzes the difficult-to-understand formulas in "Machine Learning" (Watermelon Book) and adds derivation details. Thank you for your question!

There are shortcomings: the answer is too brief, and the answer needs to be more specific; thank you for your question, which feels rather rigid and can be removed.

We then modify the Prompt template in a targeted manner, adding requirements for specific answers, and removing the “Thank you for your question” part:

template_v2 = """Use the following context to answer the last question. If you don't know the answer, just say you don't know and don't try to make it up.

case. You should make your answer as detailed and specific as possible without going off topic. If the answer is relatively long, please segment it into paragraphs as appropriate to improve the reading experience of the answer.

{context}

Question: {question}

Useful answers:"""

QA_CHAIN_PROMPT = PromptTemplate(input_variables=["context","question"],

template=template_v2)

qa_chain = RetrievalQA.from_chain_type(llm,

retriever=vectordb.as_retriever(),

return_source_documents=True,

chain_type_kwargs={"prompt":QA_CHAIN_PROMPT})

question = "What is a Pumpkin Book"

result = qa_chain({"query": question})

print(result["result"])

The Pumpkin Book is a supplementary analysis book for Teacher Zhou Zhihua’s "Machine Learning" (Watermelon Book). It aims to analyze the more difficult to understand formulas in Xigua's book and add specific derivation details to help readers better understand the knowledge in the field of machine learning. The content of the Pumpkin Book is expressed using the Watermelon Book as prerequisite knowledge. The best way to use it is to refer to it when you encounter a formula that you cannot derive or understand. The writing team of Pumpkin Book is committed to helping readers become qualified "sophomore students with a solid foundation in science, engineering and mathematics", and provides an online reading address and the address for obtaining the latest PDF version for readers to use.

It can be seen that the improved v2 version can give more specific and detailed answers, solving the previous problems. But we can think further and ask the model to give specific and detailed answers. Will it lead to unfocused and vague answers to some key points? We test the following questions:

question = "What are the principles for constructing Prompt when using large models?"

result = qa_chain({"query": question})

print(result["result"])

When using a large language model, the principles of constructing Prompt mainly include writing clear and specific instructions and giving the model sufficient time to think. First, Prompt needs to clearly express the requirements and provide sufficient contextual information to ensure that the language model accurately understands the user's intention. It's like explaining things to an alien who knows nothing about the human world. It requires detailed and clear descriptions. A prompt that is too simple will make it difficult for the model to accurately grasp the task requirements. Secondly, it is also crucial to give the language model sufficient inference time. Similar to the time humans need to think when solving problems, models also need time to reason and generate accurate results. Hasty conclusions often lead to erroneous output. Therefore, when designing Prompt, the requirement for step-by-step reasoning should be added to allow the model enough time to think logically, thereby improving the accuracy and reliability of the results. By following these two principles, designing optimized prompts can help language models realize their full potential and complete complex reasoning and generation tasks. Mastering these Prompt design principles is an important step for developers to successfully apply language models. In practical applications, continuously optimizing and adjusting Prompt and gradually approaching the best form are key strategies for building efficient and reliable model interaction.

As you can see, in response to our questions about the LLM course, the model’s answer was indeed detailed and specific, and fully referenced the course content. However, the answer started with the words first, second, etc., and the overall answer was divided into 4 paragraphs, resulting in an answer that was not particularly focused and clear. , not easy to read. Therefore, we construct the following Bad Case:

Question: What are the principles for constructing Prompt when using large models?

Initial answer: slightly

Weaknesses: no focus, vagueness

For this Bad Case, we can improve Prompt and require it to mark answers with several points to make the answer clear and specific:

template_v3 = """Use the following context to answer the last question. If you don't know the answer, just say you don't know and don't try to make it up.

case. You should make your answer as detailed and specific as possible without going off topic. If the answer is relatively long, please segment it into paragraphs as appropriate to improve the reading experience of the answer.

If the answer has several points, you should answer them with point numbers to make the answer clear and specific.

{context}

Question: {question}

Useful answers:"""

QA_CHAIN_PROMPT = PromptTemplate(input_variables=["context","question"],

template=template_v3)

qa_chain = RetrievalQA.from_chain_type(llm,

retriever=vectordb.as_retriever(),

return_source_documents=True,

chain_type_kwargs={"prompt":QA_CHAIN_PROMPT})

question = "What are the principles for constructing Prompt when using large models?"

result = qa_chain({"query": question})

print(result["result"])

1. Writing clear and specific instructions is the first principle of constructing Prompt. Prompt needs to clearly express the requirements and provide sufficient context so that the language model can accurately understand the intention. Prompts that are too simple will make it difficult for the model to complete the task. 2. Giving the model sufficient time to think is the second principle in constructing Prompt. Language models take time to reason and solve complex problems, and conclusions drawn in a hurry may not be accurate. Therefore, Prompt should include requirements for step-by-step reasoning, allowing the model enough time to think and generate more accurate results. 3. When designing Prompt, specify the steps required to complete the task. By given a complex task and a series of steps to complete the task, it can help the model better understand the task requirements and improve the efficiency of task completion. 4. Iterative optimization is a common strategy for constructing Prompt. Through the process of continuous trying, analyzing results, and improving prompts, we gradually approach the optimal prompt form. Successful prompts are usually arrived at through multiple rounds of adjustments. 5. Adding table description is a way to optimize Prompt. Asking the model to extract information and organize it into a table, specifying the columns, table names, and format of the table can help the model better understand the task and generate expected results. In short, the principles for constructing Prompt include clear and specific instructions, giving the model enough time to think, specifying the steps required to complete the task, iterative optimization and adding table descriptions, etc. These principles can help developers design efficient and reliable prompts to maximize the potential of language models.force.

There are many ways to improve the quality of answers. The core is to think about the specific business, find out the unsatisfactory points in the initial answers, and make targeted improvements. I will not go into details here.

Indicate the source of knowledge to improve credibility

Due to the hallucination problem in large models, we sometimes suspect that model answers are not derived from existing knowledge base content. This is especially important for some scenarios where authenticity needs to be ensured, such as:

question = "What is the definition of reinforcement learning?"

result = qa_chain({"query": question})

print(result["result"])

Reinforcement learning is a machine learning method designed to allow an agent to learn how to make a series of good decisions through interaction with the environment. In reinforcement learning, an agent chooses an action based on the state of the environment and then adjusts its strategy based on feedback (rewards) from the environment to maximize long-term rewards. The goal of reinforcement learning is to make optimal decisions under uncertainty, similar to the process of letting a child learn to walk through trial and error. Reinforcement learning has a wide range of applications, including game play, robot control, traffic optimization and other fields. In reinforcement learning, there is constant interaction between the agent and the environment, and the agent adjusts its strategy based on feedback from the environment to obtain the maximum reward.We can require the model to indicate the source of knowledge when generating answers. This can prevent the model from fabricating knowledge that does not exist in the given data. At the same time, it can also improve our credibility of the answers generated by the model:

``py template_v4 = “““Use the following context to answer the last question. If you don’t know the answer, just say you don’t know and don’t try to make it up. case. You should make your answer as detailed and specific as possible without going off topic. If the answer is relatively long, please segment it into paragraphs as appropriate to improve the reading experience of the answer. If the answer has several points, you should answer them with point numbers to make the answer clear and specific. Please attach the original source text of your answer to ensure the correctness of your answer. {context} Question: {question} Useful answers:”””

QA_CHAIN_PROMPT = PromptTemplate(input_variables=[“context”,“question”], template=template_v4) qa_chain = RetrievalQA.from_chain_type(llm, retriever=vectordb.as_retriever(), return_source_documents=True, chain_type_kwargs={“prompt”:QA_CHAIN_PROMPT})

question = “What is the definition of reinforcement learning?” result = qa_chain({“query”: question}) print(result[“result”])

```markup Reinforcement learning is a machine learning method designed to allow an agent to learn how to make a series of good decisions through interaction with the environment. In this process, the agent will adjust its behavior based on feedback (rewards) from the environment to maximize the sum of long-term rewards. The goal of reinforcement learning is to make optimal decisions under uncertainty, similar to the process of letting a child learn to walk through trial and error. The interaction process of reinforcement learning consists of two parts: the agent and the environment. The agent selects actions based on the state of the environment, and the environment outputs the next state and reward based on the actions of the agent. Reinforcement learning has a wide range of applications, including game play, robot control, traffic management and other fields. [Source: Mushroom Book One Language Two Intensive Learning Tutorial].

Construct a thinking chain

Large models can often understand and execute instructions well, but the models themselves still have some limitations in their capabilities, such as the illusion of large models, the inability to understand more complex instructions, and the inability to execute complex steps. We can minimize its ability limitations by constructing a thinking chain and structuring Prompt into a series of steps. For example, we can construct a two-step thinking chain and require the model to reflect in the second step to eliminate the illusion of a large model as much as possible. question.

We first have such a Bad Case:

Question: How should we structure an LLM project

Initial answer: slightly

There are shortcomings: In fact, the content in the knowledge base on how to construct an LLM project is to use the LLM API to build an application. The model's answer seems reasonable, but in fact it is the illusion of a large model. It is obtained by splicing some related texts. question

question = "How should we structure an LLM project"

result = qa_chain({"query": question})

print(result["result"])

There are several steps to consider when building an LLM project: 1. Determine project goals and requirements: First, clarify what problem your project is to solve or achieve, and determine the specific scenarios and tasks that require the use of LLM. 2. Collect and prepare data: According to project needs, collect and prepare suitable data sets to ensure the quality and diversity of data to improve the performance and effect of LLM. 3. Design prompts and fine-tune instructions: Design appropriate prompts based on project requirements to ensure clear instructions, which can guide LLM to generate text that meets expectations. 4. Carry out model training and fine-tuning: Use basic LLM or instruction fine-tuning LLM to train and fine-tune the data to improve the performance and accuracy of the model on specific tasks. 5. Test and evaluate the model: After the training is completed, test and evaluate the model to check its performance and effect in different scenarios, and make necessary adjustments and optimizations based on the evaluation results. 6. Deploy and apply the model: Deploy the trained LLM model into actual applications to ensure that it can run normally and achieve the expected results, and continuously monitor and optimize the performance of the model. Source: Summarize based on the context provided.In this regard, we can optimize Prompt and turn the previous Prompt into two steps, requiring the model to reflect in the second step:

template_v4 = """ Please perform the following steps in sequence: ① Use the following context to answer the last question. If you don't know the answer, just say you don't know and don't try to make up the answer. You should make your answer as detailed and specific as possible without going off topic. If the answer is relatively long, please segment it into paragraphs as appropriate to improve the reading experience of the answer. If the answer has several points, you should answer them with point numbers to make the answer clear and specific. Context: {context} Question: {question} Useful answers: ② Based on the context provided, reflect on whether there is anything incorrect or not based on the context in the answer. If so, answer that you don’t know. Make sure you follow every step and don't skip any. """ QA_CHAIN_PROMPT = PromptTemplate(input_variables=["context","question"], template=template_v4) qa_chain = RetrievalQA.from_chain_type(llm, retriever=vectordb.as_retriever(), return_source_documents=True, chain_type_kwargs={"prompt":QA_CHAIN_PROMPT}) question = "How should we structure an LLM project" result = qa_chain({"query": question}) print(result["result"])

Based on the information provided in the context, there are several steps to consider in constructing an LLM project: 1. Determine project goals: First, clarify what your project goals are, whether you want to perform text summarization, sentiment analysis, entity extraction, or other tasks. Determine how to use LLM and how to call the API interface according to the project goals. 2. Design Prompt: Design an appropriate Prompt based on the project goals. The Prompt should be clear and specific, guiding LLM to generate expected results. The design of Prompt needs to take into account the specific requirements of the task. For example, in a text summary task, the Prompt should contain the text content that needs to be summarized. 3. Call the API interface: According to the designed prompt, programmatically call the LLM API interface to generate results. Make sure the API interface is called correctly to obtain accurate results. 4. Analyze results: After obtaining the results generated by LLM, analyze the results to ensure that the results meet the project goals and expectations. If the results do not meet expectations, you can adjust Prompt or other parameters to generate results again. 5. Optimization and improvement: Based on feedback from analysis results, continuously optimize and improve the LLM project to improve the efficiency and accuracy of the project. You can try different prompt designs, adjust the parameters of the API interface, etc. to optimize the project. Through the above steps, you can build an effective LLM project, using the powerful functions of LLM to implement tasks such as text summary, sentiment analysis, entity extraction, etc., and improve work efficiency and accuracy. If anything is unclear or you need further guidance, you can always seek help from experts in the relevant field.It can be seen that after asking the model to reflect on itself, the model repaired its illusion and gave the correct answer. We can also accomplish more functions by constructing a thinking chain, which I won’t go into details here. Readers are welcome to try.

Add a command parsing

We often face a requirement that we need the model to output in a format we specify. However, because we use Prompt Template to populate user questions, the formatting requirements present in user questions are often ignored, such as:

question = "What is the classification of LLM? Return me a Python List"

result = qa_chain({"query": question})

print(result["result"])

According to the information provided by the context, the classification of LLM (Large Language Model) can be divided into two types, namely basic LLM and instruction fine-tuning LLM. Basic LLM is based on text training data to train a model with the ability to predict the next word, usually by training on a large amount of data to determine the most likely word. Instruction fine-tuning LLM is to fine-tune the basic LLM to better adapt to a specific task or scenario, similar to providing instructions to another person to complete the task. Depending on the context, a Python List can be returned, which contains two categories of LLM: ["Basic LLM", "Instruction fine-tuning LLM"].

As you can see, although we asked the model to return a Python List, the output request was wrapped in a Template and ignored by the model. To address this problem, we can construct a Bad Case:

Question: What are the classifications of LLM? Returns me a Python List

Initial answer: Based on the context provided, the classification of LLM can be divided into basic LLM and instruction fine-tuning LLM.

There is a shortcoming: the output is not according to the requirements in the instruction.

To solve this problem, an existing solution is to add a layer of LLM before our retrieval LLM to realize the parsing of instructions and separate the format requirements of user questions and question content. This idea is actually the prototype of the currently popular Agent mechanism, that is, for user instructions, set up an LLM (i.e. Agent) to understand the instructions, determine what tools need to be executed by the instructions, and then call the tools that need to be executed in a targeted manner. Each of these tools can It is an LLM based on different Prompt Engineering, or it can be a database, API, etc. There is actually an Agent mechanism designed in LangChain, but we will not go into details in this tutorial. Here we only simply implement this function based on OpenAI’s native interface:

# Use the OpenAI native interface mentioned in Chapter 2

from openai import OpenAI

client = OpenAI(

# This is the default and can be omitted

api_key=os.environ.get("OPENAI_API_KEY"),

)

def gen_gpt_messages(prompt):

'''

Construct GPT model request parameters messages

Request parameters:

prompt: corresponding user prompt word

'''

messages = [{"role": "user", "content": prompt}]

return messages

def get_completion(prompt, model="gpt-3.5-turbo", temperature = 0):

'''

Get GPT model calling results

Request parameters:

prompt: corresponding prompt word

model: The called model, the default is gpt-3.5-turbo, you can also select other models such as gpt-4 as needed

temperature: The temperature coefficient of the model output, which controls the randomness of the output. The value range is 0~2. The lower the temperature coefficient, the more consistent the output content will be.

'''

response = client.chat.completions.create(

model=model,

messages=gen_gpt_messages(prompt),

temperature=temperature,

)

if len(response.choices) > 0:

return response.choices[0].message.content

return "generate answer error"

prompt_input = '''

Please determine whether the following questions contain format requirements for output, and output according to the following requirements:

Please return me a parsable Python list. The first element of the list is the format requirement for the output, which should be an instruction; the second element is the original question of removing the format requirement.

If there is no format requirement, please leave the first element empty

Questions that require judgment:

~~~

{}

~~~

Do not output any other content or format, and ensure that the returned results are parsable.

'''

Let’s test the LLM’s ability to decompose format requirements:

response = get_completion(prompt_input.format(question))

response

''``\n["Return me a Python List", "What is the classification of LLM?"]\n```'

It can be seen that through the above prompt, LLM can effectively parse the output format. Next, we can set up another LLM to parse the output content according to the output format requirements:

prompt_output = '''

Please answer the question according to the given format requirements according to the answer text and output format requirements.

Questions to be answered:

~~~

{}

~~~

Answer text:

~~~

{}

~~~

Output format requirements:

~~~

{}

~~~

'''

We can then concatenate the two LLMs with the retrieval chain:

question = 'What is the classification of LLM? Return me a Python List'

# First split the format requirements and questions

input_lst_s = get_completion(prompt_input.format(question))

# Find the starting and ending characters of the split list

start_loc = input_lst_s.find('[')

end_loc = input_lst_s.find(']')

rule, new_question = eval(input_lst_s[start_loc:end_loc+1])

# Then use the split question to call the retrieval chain

result = qa_chain({"query": new_question})

result_context = result["result"]

# Then call the output format parsing

response = get_completion(prompt_output.format(new_question, result_context, rule))

response

"['Basic LLM', 'Command fine-tuning LLM']"As you can see, after the above steps, we have successfully implemented the limitation of the output format. Of course, in the above code, the core is to introduce the idea of Agent. In fact, whether it is the Agent mechanism or the Parser mechanism (that is, limited output format), LangChain provides a mature tool chain for use. Interested readers are welcome to discuss it in depth. I won’t go into the explanation here.

Through the ideas explained above and combined with actual business conditions, we can continuously discover Bad Cases and optimize Prompts accordingly, thereby improving the performance of the generated part. However, the premise of the above optimization is that the retrieval part can retrieve the correct answer fragment, that is, the retrieval accuracy and recall rate are as high as possible. So, how can we evaluate and optimize the performance of the retrieval part? We will explore this issue in depth in the next chapter.

Evaluate and optimize the search part

The premise of generation is retrieval. Only when the retrieval part of our application can retrieve the correct answer document according to the user query, the generation result of the large model may be correct. Therefore, the retrieval precision and recall rate of the retrieval part actually affect the overall performance of the application to a greater extent. However, the optimization of the retrieval part is a more engineering and in-depth proposition. We often need to use many advanced advanced techniques derived from search and explore more practical tools, and even hand-write some tools for optimization.

Review the entire RAG development process analysis:

For a query entered by the user, the system will convert it into a vector and match the most relevant text paragraphs in the vector database. Then according to our settings, 3 to 5 text paragraphs will be selected and handed over to the large model together with the user’s query. The large model answers the questions posed in the user query based on the retrieved text paragraphs. In this entire system, we call the part where the vector database retrieves relevant text paragraphs the retrieval part, and the part where the large model generates answers based on the retrieved text paragraphs is called the generation part.

Therefore, the core function of the retrieval part is to find text paragraphs that exist in the knowledge base and can correctly answer the questions in the user query. Therefore, we can define the most intuitive accuracy rate to evaluate the retrieval effect: for N given queries, we ensure that the correct answer corresponding to each query exists in the knowledge base. Assume that for each query, the system finds K text fragments. If the correct answer is in one of the K text fragments, then we consider the retrieval successful; if the correct answer is not in one of the K text fragments, our task retrieval fails. Then, the retrieval accuracy of the system can be simply calculated as:

$$accuracy = \frac{M}{N}$$

Among them, M is the number of successfully retrieved queries.

Through the above accuracy rate, we can measure the system’s retrieval capabilities. For the queries that the system can successfully retrieve, we can further optimize the prompt to improve system performance. For queries whose system retrieval fails, we must improve the retrieval system to optimize the retrieval effect. But note that when we calculate the accuracy defined above, we must ensure that the correct answer to each of our verification queries actually exists in the knowledge base; if the correct answer does not exist, then we should attribute Bad Case Moving to the knowledge base construction part, it shows that the breadth and processing accuracy of knowledge base construction still need to be improved.

Of course, this is just the simplest evaluation method. In fact, this evaluation method has many shortcomings. For example:

- Some queries may require combining multiple knowledge fragments to answer. How do we evaluate such queries?

- The order of retrieved knowledge fragments will actually affect the generation of large models. Should we take the order of retrieved fragments into consideration?